15 April 2020 – Business organizations have always been about supply networks, even before business leaders consciously thought in those terms. During the first half of the 20th century, the largest firms were organized hierarchically, like the monarchies that ruled the largest nations. Those firms, some of which were already international in scope, like the East India Company of previous centuries, thought in monopolistic terms. Even as late as the early 1960s, when I was in high school, management theory ran to vertical and horizontal monopolies. As globalization grew, the vertical monopoly model transformed into multinational enterprises (MNEs) consisting of supply chains of smaller companies supplying subassemblies to larger companies that ultimately distributed branded products (such as the ubiquitous Apple iPhone) to consumers worldwide.

The current COVID-19 pandemic has shattered that model. Supply chains, like any other chains, proved only as strong as their weakest link. Requirements for social distancing to control the contagion made it impossible to continue the intense assembly-line-production operations that powered industrialization in the early 20th century. To go forward with reopening the world economy, we need a new model.

Luckily, although luck had far less to do with it than innovative thinking, that model came together in the 1960s and 1970s, and is already present in the systems thinking behind the supply-chain model. The monolithic, hierarchically organized companies that dominated global business in the first half of the 20th century had already morphed into a patchwork of interconnected firms that powered the global economies of the first quarter of the 21st century. That is, up until the end of calendar-year 2019, when the COVID-19 pandemic trashed them. The new model we need is the systems-organization model.

The systems-organization model consists of separate functional teams, which in the large-company business world are independent firms, cooperating to produce consumer-ready products. Each firm has its own special expertise in conducting some part of the process, which it does as well as or better than its competitors. This is the comparative-advantage concept outlined by David Ricardo over 200 years ago that was, itself, based on ideas that had been vaguely floating around since the ancient Greek thinker Hesiod wrote what has been called the first book about economics, Works and Days, somewhere in the middle of the first millennium BCE.

Each of those independent firms does its little part of the process on stuff it gets from other firms upstream in the production flow, and passes its output on downstream to the next firm in the flow. The idea of a supply chain arises from thinking about what happens to an individual product. A given TV set, for example, starts with raw materials that are processed in piecemeal fashion by different firms as it journeys along its own particular path to become, say, a Sony TV shipped, ultimately, to an individual consumer. Along the way, the thinking goes, each step in the process ideally is done by the firm with the best comparative advantage for performing that operation. Hence, the systems model for an MNE that produces TVs is a chain of firms that each do their bit of the process better than anyone else. Of course, that leaves the entire MNE at risk from any exogenous force, from an earthquake to a pandemic, that disrupts operations at any of the firms in the chain. What was originally the firm with the Ricardian comparative advantage for doing its part suddenly becomes a hole that breaks the entire chain.

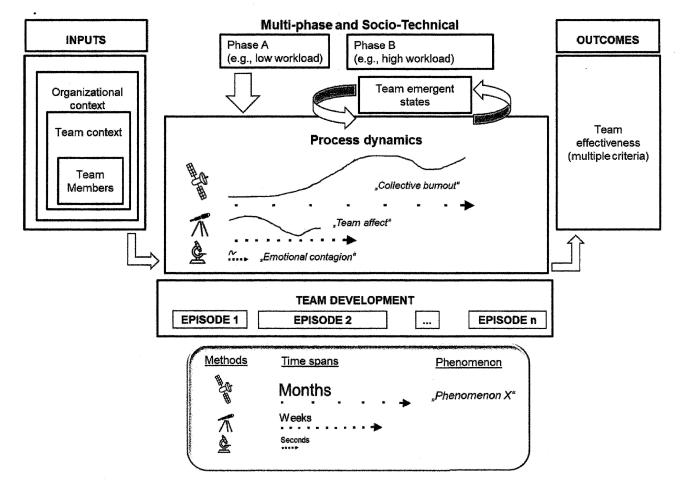

Systems theory, however, provides an answer: the supply network. The difference between a chain and a network is its interconnectedness. In network parlance, the firms that conduct steps in the process are called nodes, and the interconnections between nodes are called links. In a supply chain, nodes have only one input link from an upstream firm, and only one output link to the next firm in the chain. In a wider network, each node has multiple links into the node, and multiple links out of the node. With that kind of structure, if one node fails, the flow of products can bypass that node and keep feeding the next node(s) downstream. This is the essence of a self-healing network. Whereas a supply chain is brittle in that any failure anywhere breaks the whole system down, a self-healing network is robust in that single-point failures do not take down the entire system, but cause flow paths to adjust to keep the entire system operating.
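For readers who think better in code, here is a minimal sketch of the chain-versus-network difference. The firm names, the two example graphs, and the `can_deliver` helper are all hypothetical, invented only for illustration; the sketch simply checks whether product can still reach the consumer after one node fails.

```python
from collections import deque

def can_deliver(links, source, sink, failed=frozenset()):
    """Return True if product can still flow from source to sink
    along the links while skipping every node listed in `failed`."""
    if source in failed or sink in failed:
        return False
    seen = {source}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if node == sink:
            return True
        for nxt in links.get(node, ()):
            if nxt not in failed and nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return False

# Hypothetical supply chain: one input link and one output link per firm.
chain = {
    "raw": ["parts"],
    "parts": ["assembly"],
    "assembly": ["consumer"],
}

# Hypothetical supply network: two firms at each intermediate stage,
# each linked to both firms in the next stage.
network = {
    "raw": ["parts_a", "parts_b"],
    "parts_a": ["assembly_a", "assembly_b"],
    "parts_b": ["assembly_a", "assembly_b"],
    "assembly_a": ["consumer"],
    "assembly_b": ["consumer"],
}

# Knock out a single parts supplier in each structure.
chain_survives = can_deliver(chain, "raw", "consumer", failed={"parts"})
network_survives = can_deliver(network, "raw", "consumer", failed={"parts_a"})
print(chain_survives, network_survives)  # False True
```

The chain stops delivering as soon as its only parts supplier goes down, while the network reroutes through the second supplier. Note that the network is not invulnerable: if both parts suppliers fail at once, nothing reaches the consumer.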

The idea of providing alternative pathways via multiple linkages flies in the face of Ricardo’s comparative-advantage concept. Ricardo’s idea was that in a collection of competitors producing the same or similar goods, the one firm that produces the best product at the lowest cost drives all the others out of business. Requiring simultaneous use of multiple suppliers means not allowing the firm with the best comparative advantage to drive the others out of business. By accepting slightly inferior value from alternative suppliers into the supply mix, the network accepts slightly inferior value in the final product while ensuring that, when the best supplier fails for any reason, the second-best supplier is there, on line, ready to go, to take up the slack. The network deliberately sacrifices some of the comparative advantage it would gain from relying only on the best potential suppliers in order to lower the risk of having its supply flow disrupted in the future.
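The tradeoff can be made concrete with a back-of-the-envelope sketch. Every number below is hypothetical, chosen only to illustrate the reasoning, and the sketch assumes that supplier failures are independent of one another and that the surviving supplier can cover the full load. In a pandemic, failures tend to be correlated, which would shrink the benefit shown here.

```python
def expected_value(unit_value, availability):
    """Expected value delivered per period: the value produced when
    supply is flowing, times the probability that it is flowing."""
    return unit_value * availability

# All numbers are hypothetical, for illustration only.
p_fail = 0.10         # chance that any one supplier is down in a period
best_value = 100.0    # value per period when only the best supplier is used
blended_value = 97.0  # slightly lower value when a second-best supplier shares the work

# Single supplier: supply stops whenever that one supplier fails.
single = expected_value(best_value, 1 - p_fail)

# Two suppliers, failures assumed independent: supply stops only if both fail.
dual = expected_value(blended_value, 1 - p_fail ** 2)

print(round(single, 2), round(dual, 2))  # 90.0 96.03
```

Under these assumed numbers, giving up three percent of the value per period buys a higher expected value overall, because the flow almost never stops. With a more reliable best supplier or a much weaker second-best supplier, the comparison could go the other way.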

This, itself, is a risky strategy. This kind of network cannot survive as a subnet in a larger, brittle supply chain. If its suppliers and, especially, customers embrace the Ricardo model, it could be in big trouble. First of all, a highly interconnected subnet embedded in a long supply chain is still subject to disruptions anywhere else in the rest of the chain. Second, if suppliers and customers have an alternative path through a firm with better comparative advantage than the subnet, Ricardo’s theory suggests that the subnet is what will be driven out of business. For this alternative strategy to work, the entire industry, from suppliers to customers, has to embrace it. This proviso, of course, is why we’ve been left with brittle supply chains decimated by disruptions due to the COVID-19 pandemic. The alternative is adopting a different, more robust paradigm for global supply networks en masse.